DeepSeek AI has challenged this perception. As mentioned, SemiAnalysis estimates that DeepSeek has spent over $500 million on Nvidia chips. Many experts doubt the company’s claim that its sophisticated model cost just $5.6 million to develop. DeepSeek’s APIs cost much less than OpenAI’s APIs. Many would flock to DeepSeek’s APIs if they offer performance similar to OpenAI’s models at more affordable prices. A competitor such as OpenAI could respond by releasing more advanced models that significantly surpass DeepSeek’s performance or by lowering the prices of existing models to retain its user base. DeepSeek raises questions about AI development costs and has also gained considerable popularity in China. Its API costs money to use, just as ChatGPT and other prominent models charge for API access. I have been reading about China and some of the companies in China, one in particular coming up with a faster method of AI and a much cheaper method, and that is good because you do not have to spend as much money. One can use different experts than Gaussian distributions. Nvidia is one of the main companies affected by DeepSeek’s launch. US firms invest billions in AI development and use advanced computer chips.

But Wall Street banking giant Citi cautioned that while DeepSeek might challenge the dominant positions of American firms such as OpenAI, challenges faced by Chinese firms may hamper their development. DeepSeek has spurred concerns that AI companies won’t need as many Nvidia H100 chips as expected to build their models. Hence, startups like CoreWeave and Vultr have built formidable businesses by renting H100 GPUs to this cohort. App developers have little loyalty in the AI sector, given the scale they deal with. Given the estimates, demand for Nvidia H100 GPUs likely won’t fall soon. H100 GPUs have become expensive and difficult for small technology companies and researchers to acquire. Wiz claims to have gained full operational control of a database belonging to DeepSeek within minutes. Hungarian National High-School Exam: In line with Grok-1, we evaluated the model's mathematical capabilities using the Hungarian National High School Exam. It offers real-time, actionable insights into critical, time-sensitive decisions using natural language search. Core features of the DeepSeek AI tool: a user-friendly panel that delivers quick insights on demand. Potential for Misuse: Any powerful AI tool can be misused for malicious purposes, such as producing misinformation or creating deepfakes.

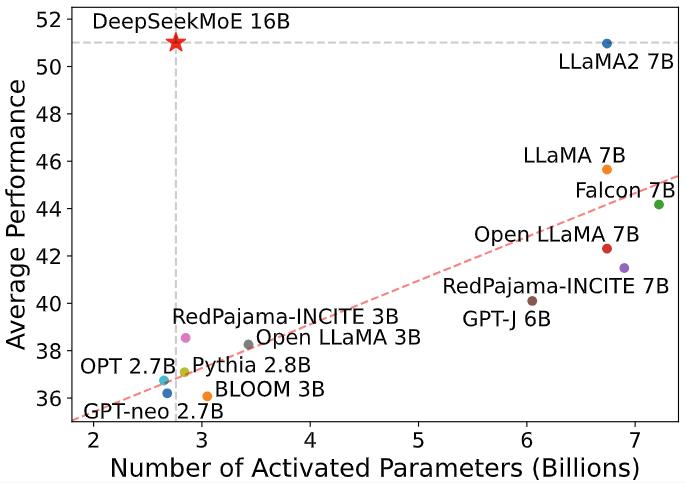

Interested developers can sign up on the DeepSeek Open Platform, create API keys, and follow the on-screen instructions and documentation to integrate their desired API. Developers can access and integrate DeepSeek’s APIs into their websites and apps. This change would be more pronounced for small app developers with limited budgets. DeepSeek AI developed a robust model with limited resources. In the open-weight category, I think MoEs were first popularised at the end of last year with Mistral’s Mixtral model and then more recently with DeepSeek v2 and v3. He previously built companies using AI for trading, and his interest in AI comes from curiosity. But then it sort of started stalling, or at least not getting better with the same oomph it did at first. The dataset is constructed by first prompting GPT-4 to generate atomic and executable function updates across 54 functions from 7 diverse Python packages. To get an intuition for routing collapse, consider trying to train a model such as GPT-4 with 16 experts in total and 2 experts active per token. The full 671B model is too large for a single PC; you’ll need a cluster of Nvidia H800 or H100 GPUs to run it comfortably.
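The routing example above (16 experts in total, 2 active per token) can be made concrete with a small sketch. It is not DeepSeek’s or any other model’s actual router: the gate logits are random numbers, nothing is trained, and the `bias` argument is a hypothetical stand-in for a router that already favours a few experts. It only shows what balanced and collapsed expert loads look like under top-2 selection.

```python
import math
import random
from collections import Counter

NUM_EXPERTS = 16  # total experts, as in the example above
TOP_K = 2         # experts active per token


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route(logits, k=TOP_K):
    """Return the indices of the k experts with the highest gate probability."""
    probs = softmax(logits)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return ranked[:k]


def expert_load(bias, num_tokens=10_000, seed=0):
    """Count how often each expert is chosen across random tokens.

    `bias` is a hypothetical per-expert offset added to every token's gate
    logits (an assumption made for illustration, not a real model parameter).
    """
    rng = random.Random(seed)
    load = Counter()
    for _ in range(num_tokens):
        logits = [rng.gauss(0.0, 1.0) + bias[e] for e in range(NUM_EXPERTS)]
        load.update(route(logits))
    return load


# No expert is favoured: load spreads roughly evenly over all 16 experts.
balanced = expert_load([0.0] * NUM_EXPERTS)
# Experts 0 and 1 are strongly favoured: almost every token goes to them.
collapsed = expert_load([5.0, 5.0] + [0.0] * (NUM_EXPERTS - 2))

print("balanced :", sorted(balanced.items()))
print("collapsed:", sorted(collapsed.items()))
```

In the collapsed case the other 14 experts are almost never selected, so in a real training run they would receive very little gradient signal, which is the intuition behind routing collapse.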

You can access seven variants of R1 via Ollama: 1.5B, 7B, 8B, 14B, 32B, 70B, and 671B. The B stands for "billion," indicating the number of parameters in each variant. The corresponding Ollama command will immediately download and launch the R1 8B variant on your PC. We advise running the 8B variant on your local PC, as this compressed model best fits high-spec PCs with Nvidia GPUs. The news that TSMC was mass-producing AI chips on behalf of Huawei reveals that Nvidia was not fighting against China’s chip industry but rather the combined efforts of China (Huawei’s Ascend 910B and 910C chip designs), Taiwan (Ascend chip manufacturing and CoWoS advanced packaging), and South Korea (HBM chip manufacturing). The US tries to restrict China’s AI development. Kanerika’s AI-driven systems are designed to streamline operations, enable data-backed decision-making, and uncover new growth opportunities. U.S. tech giants are building data centers with specialized A.I. With its debut, the whole tech world is in shock. DeepSeek is a new artificial intelligence chatbot that’s sending shock waves through Wall Street, Silicon Valley and Washington.
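To see why the smaller R1 variants suit a local PC while the 671B model does not, a rough back-of-the-envelope calculation helps. The sketch below estimates the size of the weights alone from the parameter counts listed above. The 16-bit and 4-bit precisions are illustrative assumptions, not the formats Ollama necessarily ships, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Parameter counts (in billions) for the seven R1 variants listed above.
VARIANTS_B = [1.5, 7, 8, 14, 32, 70, 671]


def weight_memory_gb(params_billion, bits_per_param):
    """Approximate size of the weights alone, in decimal gigabytes."""
    bytes_total = params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


for size in VARIANTS_B:
    fp16 = weight_memory_gb(size, 16)  # assumed 16-bit weights
    q4 = weight_memory_gb(size, 4)     # assumed 4-bit quantized weights
    print(f"{size:>6}B  16-bit: {fp16:8.1f} GB   4-bit: {q4:8.1f} GB")
```

Under these assumptions the 8B variant needs roughly 4 GB for 4-bit weights, while the 671B model needs hundreds of gigabytes even when quantized, which is consistent with the advice to run it on a GPU cluster.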